Automated Misinformation at Scale

- infiniteloop

- Oct 24, 2025

Updated: Nov 15, 2025

Misinformation is not a new thing, but today it spreads (way) more easily than before. GenAI models let anyone create text, images, and videos in minutes instead of hours. They can translate content into multiple languages without human effort, and automated pipelines can then push it out across social media platforms. Researchers have found that AI-generated disinformation is more likely to go viral than conventional false content.

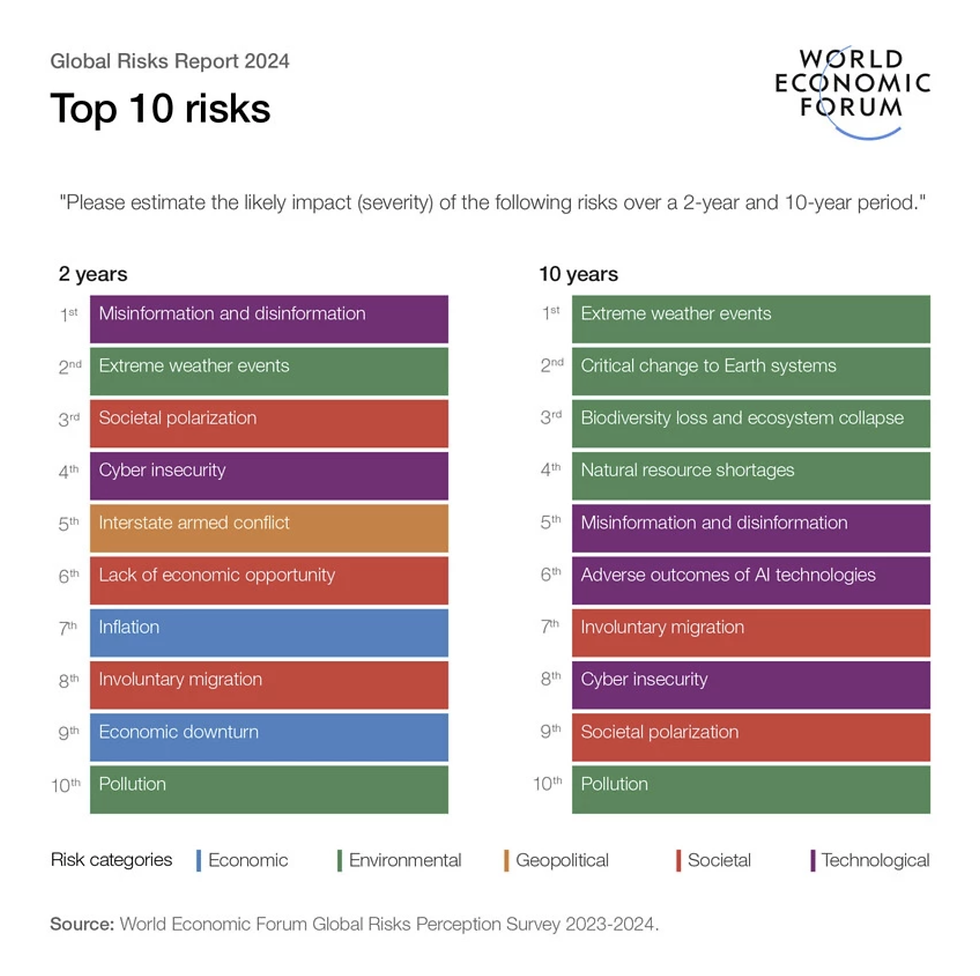

The World Economic Forum's (WEF) Global Risks Report 2024 found that 53% of surveyed experts ranked "AI-generated misinformation and disinformation" as a top short-term risk.

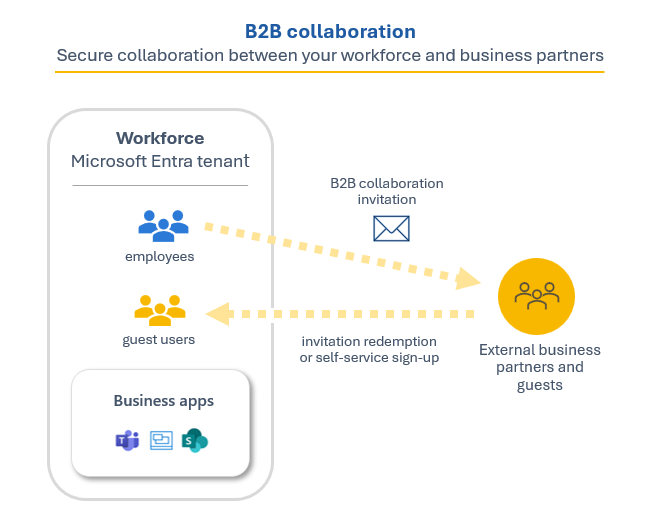

These systems were not initially built for influence. They grew out of routine automation used to boost reach and localize content: engagement bots, machine translation, and templated copy were the early uses. Public reports now show the same tooling inside covert campaigns. Platforms have documented networks posting likely AI-generated comments, translations, and packaged posts at scale. Once operators feed in false narratives, the system publishes them and repeats the cycle with hardly any supervision.

In early 2024, research from Graphika and Stanford's Internet Observatory (really worth a read) documented clusters of AI-generated content linked to geopolitical campaigns. These weren't traditional fake news pages or video deepfakes. They were language models posting continuously, translating their own work, and reacting to trending topics. The posts looked organic because they were built from genuine public data. What set them apart was the speed and density of production: tens of thousands of unique messages a day, each just different enough to evade duplicate-content filters.

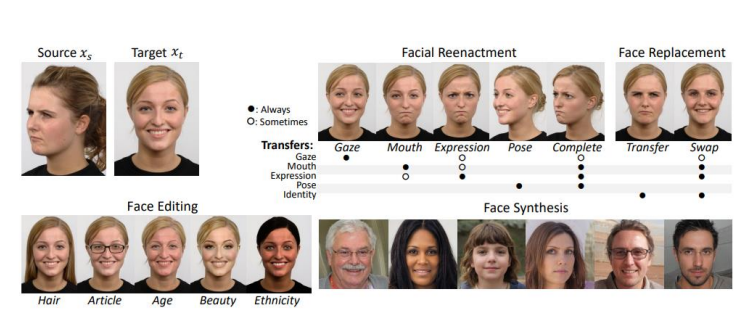
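To see why small rewrites slip past duplicate filters, here is a minimal sketch of one common near-duplicate check: Jaccard similarity over word 3-grams ("shingles"). It is an illustration only. The shingle size `k=3`, the `0.5` threshold, the helper names (`shingles`, `jaccard`, `is_near_duplicate`) and the sample sentences are hypothetical choices, not what any particular platform actually runs.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (contiguous word k-grams) in `text`."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by the size of the union."""
    if not a and not b:
        return 1.0  # two empty texts are treated as identical
    return len(a & b) / len(a | b)


def is_near_duplicate(t1: str, t2: str, k: int = 3, threshold: float = 0.5) -> bool:
    """Hypothetical filter: flag two posts whose shingle overlap meets the threshold."""
    return jaccard(shingles(t1, k), shingles(t2, k)) >= threshold


original = "The city council approved the new budget on Monday evening."
one_word_swap = "The city council approved the new budget on Monday night."
paraphrase = "On Monday evening, councillors signed off on the new city budget."

for label, text in [("one-word swap", one_word_swap), ("paraphrase", paraphrase)]:
    score = jaccard(shingles(original), shingles(text))
    print(f"{label}: similarity={score:.2f}, flagged={is_near_duplicate(original, text)}")
# one-word swap: similarity=0.78, flagged=True
# paraphrase: similarity=0.06, flagged=False
```

The one-word swap is caught, but a paraphrase carrying the same claim shares almost no 3-grams with the original, so a surface-level filter like this one lets it through.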

Some of the most widely circulated outputs of the new generation of diffusion models were viral disinformation images. The European Digital Media Observatory (EDMO) highlights several of the most cited examples, including the AI-generated photos of Pope Francis in a Balenciaga coat, fake images of Donald Trump being arrested, and a fabricated image of a Gazan father holding children.

In an August 2024 survey, 83.4% of U.S. adults said they were at least somewhat concerned that AI could be used to spread misinformation during the 2024 U.S. presidential election.

Meta and OpenAI later disclosed five covert influence operations across Russia, China, Iran, and Israel that used similar automation patterns. One of them, tied to the "Spamouflage" network, pushed more than 30,000 posts in less than three months across Facebook, X, Reddit, and Telegram. Each post was slightly rewritten by generative models to fit local language and tone. Around the same time, NATO StratCom confirmed that Russian propaganda outlets were already testing text generation tools to rewrite their daily narratives automatically.

According to the European Digital Media Observatory, roughly 80% of persistent misinformation narratives across social networks in early 2024 showed traces of automated rewriting or translation, a strong sign that human operators now do only a small share of the work.

This makes detection harder. Older methods such as metadata tracing or linguistic fingerprinting fail when every post is rewritten. GenAI systems can change syntax, tone, and vocabulary instantly while the underlying narrative stays the same, producing disinformation that looks native to each community it targets.

Statista's 2025 data visualizes how widespread public concern has become over AI's role in spreading false or misleading information.

Researchers now describe these AI networks as self-optimizing ecosystems. Each model focuses on a narrow task such as writing text, translating, or boosting visibility, and engagement metrics such as likes and shares guide the next wave of generated content. In Graphika's 2024 datasets, most of the high-performing posts within certain campaigns came from AI-generated replies rather than original human submissions.

In one recent dataset, AI-generated misinformation posts earned 8.19% more impressions, 20.54% more reposts, and 49.42% more likes than comparable human-written posts.
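These percentages are relative differences, which makes them easy to misread. Assuming each figure compares an average AI-generated post with an average human-written one (the underlying dataset is not described here), the lift for a metric $m$ works out as follows, using a hypothetical human-written baseline of 1,000 likes:

$$
\text{lift}(m) = \frac{m_{\text{AI}} - m_{\text{human}}}{m_{\text{human}}}
\quad\Rightarrow\quad
m_{\text{AI}} = m_{\text{human}}\,\bigl(1 + \text{lift}(m)\bigr) = 1000 \times 1.4942 \approx 1494 \text{ likes}
$$

Under the same assumption, dividing the two multipliers gives $1.4942 / 1.0819 \approx 1.38$, i.e. roughly 38% more likes per impression. The bigger gap is in how people react to the posts, not just in how many people see them.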

Some governments and labs are already testing defensive models trained to spot this kind of activity. They analyze message semantics and emotional framing instead of keywords or phrases. In trials, these models can map coordinated narratives across languages by tracking patterns of emotion and causality. The same technology, in reverse, can also improve offensive operations. A model tuned to detect influence can easily be retrained to produce it more efficiently.

Better detectors push operators to change tactics. Rewriting text or re-rendering media removes many detectable traces. Provenance systems like C2PA only work when they are widely adopted, which they are not yet. Identifying the source model from its output breaks down under editing and translation. And when commercial tools restrict use, open-source models fill the gap.

Scale is the next step. Automated pipelines keep content flowing at all hours, across multiple sites, with minimal human input. The activity often looks uncoordinated but constant, which complicates tracking and takedown.
